Like everyone else, I have been captivated by the capability of the ChatGPT AI. I have been trying to think through the potential uses in my work. For controversial development projects or public policies, we often have public hearings at which hundreds of people provide comments. Even with a transcript of the comments, it is often frustratingly difficult to DO ANYTHING with them because there is simply too much text.

Sometimes some poor intern is given the task of going through all the comments and creating a summary. I wanted to see if OpenAI’s GPT-3.5 AI could do this job.

To test the idea, I used public comments collected by BART during a public meeting to select a development team for a housing project at the site of the North Berkeley BART station. BART had two potential development teams present to the public and then asked members of the public to complete an online survey. Each person rated the teams from 1 to 5 on several factors and also had a chance to provide richer feedback in an open-ended text box. The scoring results were immediately useful, but the detailed text feedback is much harder to make use of.

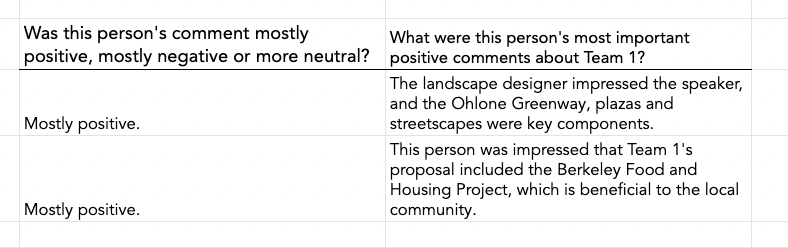

The combination of numeric scores and text created an opportunity to test the AI’s ability to evaluate sentiment from open-ended text (which might not be so conveniently accompanied by scores in other contexts).

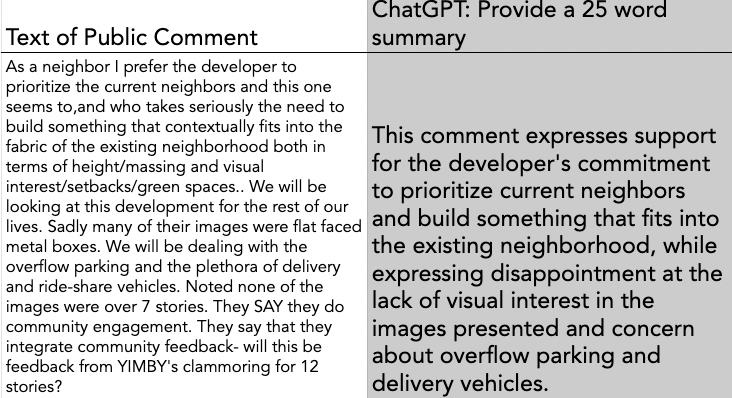

Following a methodology and very simple code published on Twitter by Shubhro Saha, I set up a Google Sheet with BART’s published data linked to the OpenAI API (text-davinci-003). The spreadsheet has a column with the text comments from users, one per row. I created a new column that used Saha’s code to feed each user’s comment to GPT and return a 25-word summary of it. The result was stunning.

OpenAI’s GPT-3.5 AI illustrates the potential for AI to review and analyze open-ended public comments, including the ability to make potentially complex judgments about the content of those comments.

With some exceptions, the results were both more accurate and more readable than what is generally produced when humans are assigned this same task. And even when humans are able to do it accurately, this is actually very hard and time-consuming work. OpenAI performed the task instantly and at a cost well under $1. Now, the pricing could change, but I don’t see a reason to think it would.

The glaring exception was that in cases where the person provided no text comment (i.e., where the comment field was blank), the AI simply made up a summary of an imaginary comment. These summaries were very consistent with the kinds of things that people typically say in meetings like this but were NOT based on actual things that real people said in this particular forum. While this seems like weird behavior, it is a well-understood aspect of large language AI models like ChatGPT. What the AI is doing is not really answering questions; it is predicting the most likely text that would complete a query. When we feed it a public comment, the most likely summary is one that fairly accurately matches that comment. But when we feed it nothing, the most likely summary seems to be based on typical comments instead of any one actual comment. In the absence of real data, the model bullshits! And it does so very convincingly. Someone reading the summaries and ignoring the full comments would never guess that these blank-line summaries were false.

It is easy enough to fix this specific problem by not asking for summaries when the comment field is blank, but it points to a much more pervasive reliability challenge. This is the reason that OpenAI says that the AI should not yet be used for tasks that really matter. There is simply no way to know when it is bullshitting us.
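The narrow fix, skipping blank rows, might look something like the Python sketch below. This is only an illustration, not the spreadsheet script used in the experiment: `complete` is a hypothetical stand-in for whatever function sends a prompt to the model and returns its text, and the prompt wording and the `NO_COMMENT` placeholder are my own assumptions.

```python
from typing import Callable, List

NO_COMMENT = "(no comment provided)"  # assumed placeholder for blank rows


def summarize_comments(comments: List[str], complete: Callable[[str], str],
                       max_words: int = 25) -> List[str]:
    """Summarize each comment, but never send a blank comment to the model."""
    summaries = []
    for comment in comments:
        text = (comment or "").strip()
        if not text:
            # Nothing to summarize, so record that instead of letting the model guess.
            summaries.append(NO_COMMENT)
            continue
        prompt = f"Summarize this public comment in {max_words} words or fewer:\n\n{text}"
        summaries.append(complete(prompt).strip())
    return summaries


def fake_complete(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the first five words of the comment."""
    comment = prompt.split("\n\n", 1)[1]
    return " ".join(comment.split()[:5])


if __name__ == "__main__":
    rows = ["The taller design fits the station area well.", "", "   "]
    print(summarize_comments(rows, fake_complete))
```

A guard like this only prevents the blank-row fabrications. It does nothing about embellishment in summaries of real comments.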

Nonetheless, I think that the summaries it produces from the actual comments are valuable enough to be very helpful right now. A summary of this important feedback with some degree of unsupported embellishment is much better than no summary at all.

But the tool appears to be capable of much more than simple summarization. In addition to summarizing, I asked OpenAI to consider whether each comment was more positive, negative or neutral, and again it did an impressive job. The model accurately picks up on subtle clues about whether a comment is supportive or critical. Similarly, it was able to provide separate summaries of positive vs negative comments. If a commenter mentioned both pros and cons of the development team’s proposal, the model was able to pull them apart. This enables us to craft a master summary that highlights the range of positive comments and also the range of concerns that the community raised, without manually reading each comment. It also seems like we could easily create an index of which comments referenced which aspects of the team or their proposal without relying on keywords. For example, we could ask whether a comment referenced building height or density and expect it to flag comments that mention how many stories a building would have, even if they didn’t use the specific words “height” or “density.”
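One way to picture that keyword-free index is a loop that asks the same yes/no question about each topic for each comment. I did not build this; the sketch below rests on assumptions: `complete` is a hypothetical function that sends a prompt to the model, the question wording is invented, and treating any reply that starts with "yes" as a match is a simplification.

```python
from typing import Callable, Dict, List


def build_topic_index(comments: List[str], topics: List[str],
                      complete: Callable[[str], str]) -> Dict[str, List[int]]:
    """Map each topic to the row numbers of the comments the model says mention it."""
    index: Dict[str, List[int]] = {topic: [] for topic in topics}
    for row, comment in enumerate(comments):
        if not comment.strip():
            continue  # skip blank rows so the model has nothing to invent
        for topic in topics:
            prompt = (f"{comment}\n\nDoes this comment refer to {topic}, "
                      "even indirectly? Answer yes or no.")
            answer = complete(prompt).strip().lower()
            if answer.startswith("yes"):
                index[topic].append(row)
    return index


def fake_complete(prompt: str) -> str:
    """Hypothetical stand-in for the model: says yes to height questions about 'stories'."""
    comment, question = prompt.split("\n\n", 1)
    if "building height" in question and "stories" in comment.lower():
        return "Yes"
    return "No"
```

With a real model behind `complete`, each flagged row would still deserve a spot check, since the model can answer "yes" with the same confidence whether or not the comment supports it.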

In some ways the most impressive thing about this experiment was that I was able to query the AI in plain English. The script I downloaded simply takes any input text, feeds it to OpenAI and returns the text that the GPT-3.5 model provides. I didn’t have to learn any programming language or read any API manual. I didn’t have to carefully construct queries in some obscure format. I just fed it the user’s comment and added “is this a positive comment?” and it returned an answer that was nearly always right.

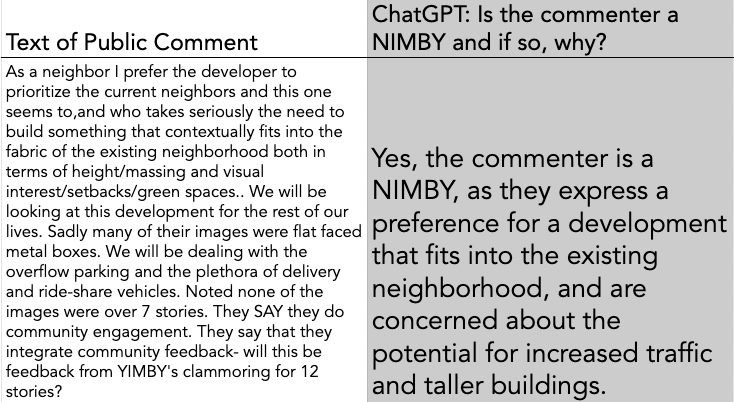

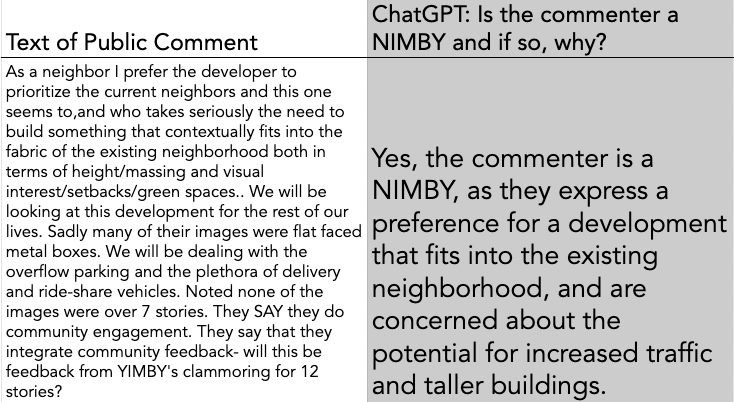

To push the system, I went a couple steps further until I finally felt like I was exceeding its capacity. For example, ChatGPT knows what a NIMBY is. It was able to identify comments that stressed concern for parking or neighborhood character as “NIMBY” comments whether the commenter was supporting or criticizing the proposed development team. I provided no definition of “NIMBY” but asked why it thought comments were NIMBY and its answers were often convincing. Similarly, it knows what a YIMBY is. It accurately categorized comments that were enthusiastic about more building, more density, less parking as YIMBY comments without any training from me.

As a side note, I recognize that the term “NIMBY” is used pejoratively to delegitimize concerns about neighborhood character. I would prefer to use a different term for this analysis but in this context there really is a strong split in the community between neighbors who are concerned about negative impacts on their own quality of life and others who are supportive of the potential of more intensive development to address the broader housing shortage in spite of possible impacts on immediate neighbors. My personal opinion is that certain aspects of neighborhood character are worthy of preservation and standing up for your neighborhood can be important even though I recognize that so often ‘neighborhood character’ is simply being used as a code for a desire to exclude on the basis of race and class. The AI model lumps racist NIMBYism together with any more enlightened neighborhood concerns – because this is how the term is most widely used online. It seems like it would be easy to provide the model with more nuanced, less loaded terms but I just didn’t take the time to do that for this experiment.

But whatever terminology we use, this is the defining conflict around the North Berkeley BART Station and so many other development projects. If the AI can sort the comments into two buckets, one for those who express concerns about impacts on current neighbors and one for those who express support for more building generally, it would be informative to then see how each development team scored within each of these two groupings. BART provided a tally of the overall average scoring for both teams, and the development team that was ultimately selected was the top scorer (though obviously many factors beyond these public surveys led to that choice). But did the YIMBYs and NIMBYs score the two teams differently?
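If the labels could be trusted, the comparison itself would be simple arithmetic: average the scores for each combination of label and team. A minimal sketch follows, with hypothetical field names (`label`, `team`, `score`) and invented numbers used purely for illustration. None of these values come from BART’s data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def average_scores_by_group(rows: List[dict]) -> Dict[Tuple[str, str], float]:
    """Average the 1-5 score for each (label, team) pair."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[(row["label"], row["team"])].append(row["score"])
    return {key: mean(scores) for key, scores in buckets.items()}


if __name__ == "__main__":
    # Invented example rows, NOT real survey results.
    rows = [
        {"label": "YIMBY", "team": "A", "score": 5},
        {"label": "YIMBY", "team": "A", "score": 3},
        {"label": "NIMBY", "team": "A", "score": 2},
        {"label": "NIMBY", "team": "B", "score": 4},
    ]
    for (label, team), avg in sorted(average_scores_by_group(rows).items()):
        print(label, team, avg)
```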

Unfortunately, this is where I think we exceed the capacity of the current tool. For comments with a clear YIMBY or NIMBY character, the AI was able to reliably identify that leaning and provide an accurate account of why each comment was either NIMBY or YIMBY. But the problem came when dealing with comments with no clear leaning (the majority of comments). Here, as with the blank comments, in the absence of clear evidence, the AI model simply makes up answers. It characterizes a positive comment about how the team included a homeless shelter operator as a NIMBY comment because the commenter wants to improve their neighborhood by housing the homeless! Submit the same comment again and the result flips: now it is considered YIMBY because it is supportive of the team which is proposing a development project, and therefore the commenter must be pro-building. Because these large language AI models are based on probabilities, when the answer is not clear, the model rolls the dice to pick an answer. Ask again and it rolls again.

The result is that, while I think the YIMBY/NIMBY analysis is tantalizing and shows the potential of the technology to really open up very meaningful analysis of open-ended public comments, I don’t think it can be relied upon for this today. Perhaps more training (of either the AI or me, the user) would enable a more reliable result. There may be ways to ask the model to evaluate these comments that would help it do a better job of ignoring the comments that are unclear and focusing only on those with clear agendas.
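One possible safeguard, which I did not try in this experiment, is to ask the same question several times and keep a label only when the answers agree. The sketch below assumes a hypothetical `classify` function that returns "NIMBY" or "YIMBY" for a comment. The number of repeats, the agreement threshold and the "UNCLEAR" fallback are all arbitrary choices of mine.

```python
from collections import Counter
from typing import Callable


def stable_label(comment: str, classify: Callable[[str], str],
                 repeats: int = 5, min_agreement: float = 0.8) -> str:
    """Ask `repeats` times; return the winning label only if it is consistent enough."""
    votes = Counter(classify(comment).strip().upper() for _ in range(repeats))
    label, count = votes.most_common(1)[0]
    if count / repeats >= min_agreement:
        return label
    # The model keeps changing its mind, so treat the comment as having no clear leaning.
    return "UNCLEAR"
```

Agreement across repeated asks is evidence of consistency, not of correctness. A model could give the same wrong label every time, so this would filter out the dice rolls described above without making the remaining labels trustworthy on its own.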

In spite of the limitations, I think that the technology shows incredible promise for unlocking the very real value that is often lost in detailed public comments. Everyone seems to agree that public engagement and input are important but, in part because it is so hard to digest these comments, public agencies often find themselves undertaking complex, expensive and time-consuming engagement efforts that result in enormous files of essentially unreadable data. AI that can understand what people are trying to say can consolidate that information and transform it into a format that can more effectively influence public decision making.

Even with the current technology, it seems entirely practical to build a surveybot that would ask people for open-ended comments on a development project, summarize those comments and show the summaries back to each user, giving them a chance to correct any misunderstanding before submitting. People have grown accustomed to the idea that their comments on surveys are mostly ignored, but a survey that could highlight the most common themes among people’s open comments would be very valuable to policymakers.